Once again, global law firm K&L Gates LLP has been named to Global Data Review's GDR 100, a ranking of the world's 100 leading data law firms. The annual list assesses law firms' privacy and data protection capabilities, their use of IP and confidentiality laws to protect proprietary data, and their work on all other personal and non-personal data laws at a global level.

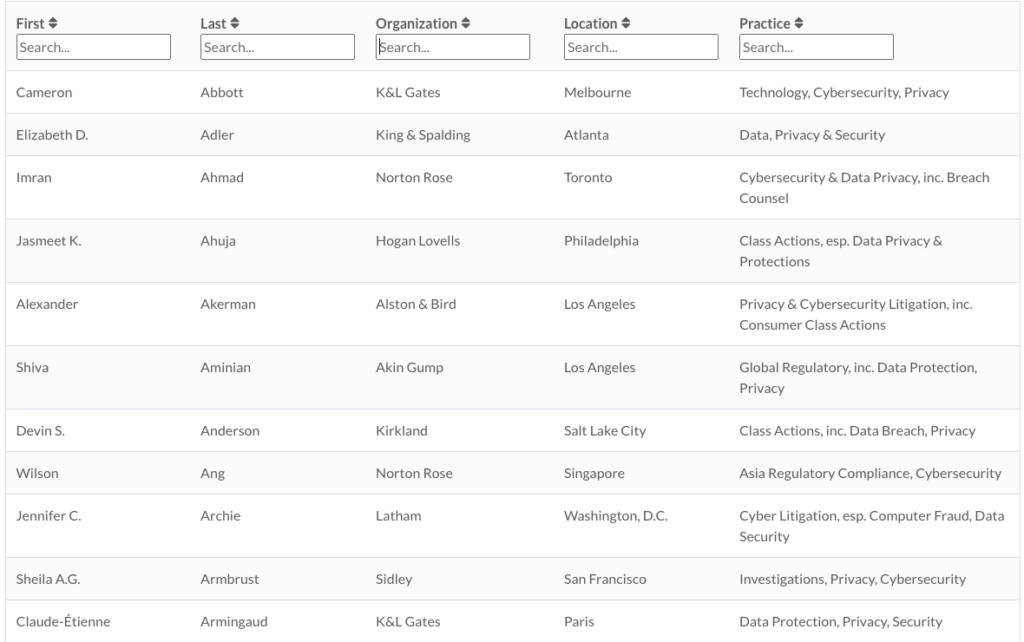

Nearly two dozen K&L Gates lawyers were recognized in the 2024 GDR 100, including Paris partner Claude-Étienne Armingaud. Other partners who lead the practice and are identified in the profile include Melbourne partner Cameron Abbott; Seattle partners Shannan Frisbie, Whitney McCollum, David Bateman, and Carley Andrews; Washington, D.C., partner Bruce Heiman; Chicago partner Limo Cherian; London partner Sarah Turpin; and Research Triangle Park partners Gina Bertolini and Leah Richardson.

Clients gave positive feedback on their experience working with K&L Gates lawyers, stating that the team has "deep knowledge of privacy laws and regulations, but they also understand the business impact of their advice. This sets them apart from other firms in the market."

K&L Gates' Data Protection, Privacy, and Security practice includes more than 60 lawyers and professionals with experience across a range of technologies and methodologies. Working globally on matters from risk assessment to incident response, breach, and crisis counseling, the team is equipped to handle most data privacy and security compliance issues. The practice also advises on cross-border mergers and acquisitions and provides specialized services in emerging areas such as biometric data compliance and defense.

The full K&L Gates profile can be read at Global Data Review (subscription required).